If your taste in TV is anything like mine, then most of your familiarity with what analog computing looks like probably comes from the backdrops of Columbo. Since digital took over the world, analog has been sidelined into what seems like a niche interest at best. But this retro approach to computing, much like space operas, is both making a comeback and something that never really left in the first place.

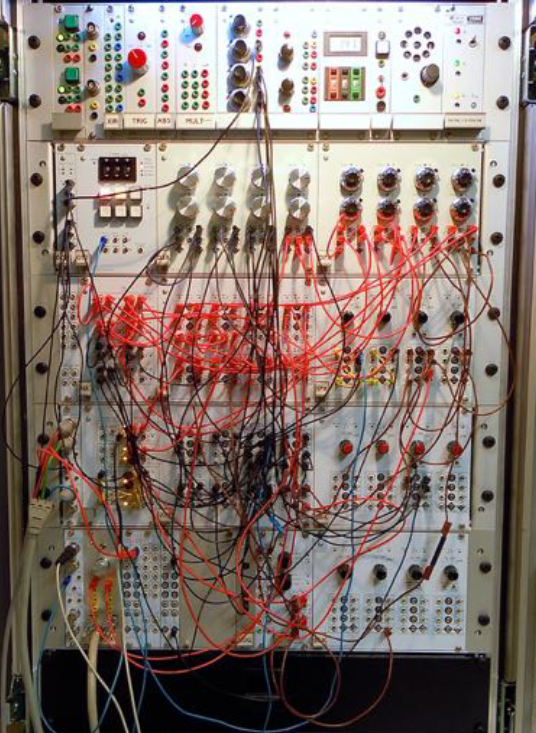

I found this out for myself about a year ago, when a video from Veritasium sparked my curiosity about analog computing. After that, I started to read a few articles here and there, and I gotta say…it broke my brain a bit. What I really wanted to know, though, was this: How can analog computing impact our daily lives? And what will that look like? Because I definitely don’t have room in my house for this.

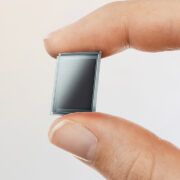

Depending on how old you are, you may remember when it was the norm for a single computer to take up more square footage than your average New York City apartment. But after the end of the Space Age and the advent of personal computers, our devices have only gotten smaller and smaller. Some proponents of analog computing argue that we might just be reaching our limits when it comes to how much further we can shrink. We’ll get to that in a bit, though. Emphasis on bits.

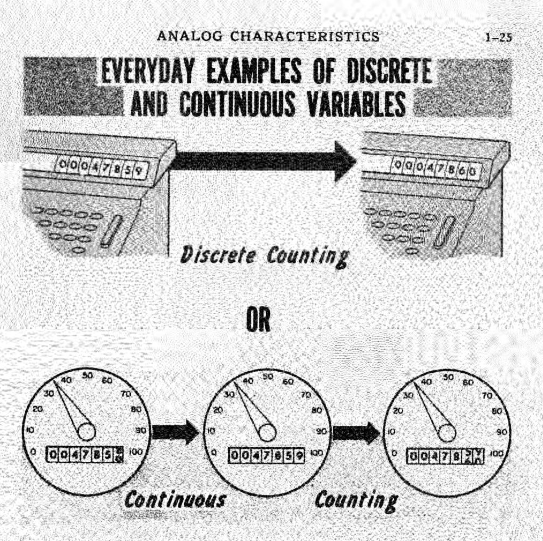

Speaking of bits, this brings us to the fundamental difference between analog and digital. Analog systems have an infinite number of states. If I were to heat this room from 68 F to 72 F, the temperature would pass through an infinite set of values, including 68.0000001 F and so on. Digital systems, on the other hand, represent values with a fixed number of “bits,” each of which is either on or off (1 or 0), much like a transistor that is switched on or off. An 8-bit system, for example, has 2⁸, or 256, states. That means it can only represent 256 different numbers.
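
To make that 256-state limit concrete, here is a minimal Python sketch that squeezes the 68 F to 72 F range from the example into an 8-bit value. The function name `quantize` and the choice of evenly spaced levels are illustrative assumptions, not a description of how any particular thermometer or chip does it.

```python
def quantize(value: float, lo: float, hi: float, bits: int) -> float:
    """Snap a continuous value in [lo, hi] to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits                       # 8 bits -> 2**8 = 256 possible states
    step = (hi - lo) / (levels - 1)          # spacing between neighboring representable values
    index = round((value - lo) / step)       # nearest level number
    index = max(0, min(levels - 1, index))   # clamp anything outside the range
    return lo + index * step


# The room passes through every temperature in this sweep, but an 8-bit
# representation can only tell 256 of them apart.
readings = {quantize(68 + i * 4 / 10_000, 68.0, 72.0, 8) for i in range(10_001)}
print(len(readings))                        # 256
print(quantize(68.0000001, 68.0, 72.0, 8))  # 68.0
```

Each 8-bit step here is 4/255, or about 0.016 F; any detail finer than that simply disappears.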

So, size isn’t the only aspect of the technological zeitgeist that’s changed. Digital computers solve problems in a fundamentally different way from analog ones. That’s led to some pretty amazing stuff in the modern day…at a cost. Immensely energy-intensive computing is becoming increasingly popular. Just look at cryptocurrencies and AI. According to a report released last year by Swedish telecommunications company Ericsson, the information and communication technology sector accounted for roughly 4% of global electricity consumption in 2020.[1]

Plus, a significant amount of digital computing is not the kind you can take to go. Among the thousands of data centers located across the globe, the average campus size is approximately 100,000 square feet (or over 9,000 square meters). That’s more than two acres of land. Some of these properties, like The Citadel in Nevada, stretch out over millions of square feet.[2] Keeping all this equipment online, to say nothing of cooling it, takes gigawatts of electricity.[3]
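
A quick way to double-check those area figures is a small Python conversion. It uses only the 100,000 square feet from the text plus two standard definitions (1 ft = 0.3048 m exactly, and 1 acre = 43,560 sq ft); the helper name `describe_area` is just for illustration.

```python
SQ_M_PER_SQ_FT = 0.09290304   # 1 ft = 0.3048 m exactly, so 1 sq ft = 0.3048**2 sq m
SQ_FT_PER_ACRE = 43_560       # 1 acre = 43,560 sq ft


def describe_area(square_feet: float) -> str:
    """Express a floor area in square feet, square meters, and acres."""
    square_meters = square_feet * SQ_M_PER_SQ_FT
    acres = square_feet / SQ_FT_PER_ACRE
    return f"{square_feet:,.0f} sq ft = {square_meters:,.0f} sq m = {acres:.2f} acres"


print(describe_area(100_000))   # 100,000 sq ft = 9,290 sq m = 2.30 acres
```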

Then consider the energy cost of just training the large language models (or LLMs) that form the backbone of new and trendy AI programs like ChatGPT. That training can suck up about as much electricity as 130 U.S. homes use in a year, and the implications get real dizzying real fast.[4] Again, that’s an approximation of how much energy is spent during training. It doesn’t account for the actual use of the models once they’re created. Data scientist Alex de Vries estimates that a single interaction with an LLM is equivalent to “leaving a low-brightness LED lightbulb on for one hour.”[5]

But as the especially power-hungry data centers, neural networks, and cryptocurrencies of the world continue to grow in scale and complexity…we still have to reckon with the climate crisis. Energy efficiency isn’t just good for the planet, it’s good for the wallet. A return to analog computing could be part of the solution. The reason why is simple: for certain kinds of problems, an analog computer can accomplish the same task as a digital setup for a fraction of the energy. In some cases, analog computing is as much as 1,000 times more efficient than its digital counterparts.[6][7]

To understand how that works, exactly, we first need to establish what makes analog computing…analog. The same way you would make a comparison with words using an analogy, analog computers operate using a physical model that corresponds to the values of the problem being solved. And yeah, I did just make up an analog analogy.

A classic example of analog computing is the Monetary National Income Analogue Computer, or MONIAC, which economist Bill Phillips created in 1949. MONIAC had a single purpose: to simulate the British economy on a macro level. Within the machine, water represented money as it literally flowed in and out of the treasury.[8] Phillips and his colleague Walter Newlyn determined that the computer could function with an approximate accuracy of ±2%. And of the 14 or so machines that were made, you can still find the first churning away at the Reserve Bank Museum in New Zealand.[9]

It’s safe to say that the MONIAC worked (and continues to work) well. The same goes for other types of analog computers, from those on the simpler end of the spectrum, like the pocket-sized mechanical calculators known as slide rules, to the behemoth tide-predicting machines invented by Lord Kelvin.[10][11]

In general, it was never that analog computing didn’t do its job. Quite the opposite. Pilots still use flight computers, a form of slide rule, to perform calculations by hand, no juice necessary.[12] But for more generalized applications, digital devices just provide a level of convenience that analog can’t. Incredible computing power has effectively become mundane.

To put things into perspective, an iPhone 14 contains a processor that runs somewhere above 3 GHz, depending on the model.[13] The Apollo Guidance Computer, itself a digital device onboard the spacecraft that first graced the moon’s surface, ran at…0.043 MHz. As computer science professor Graham Kendall once wrote, “the iPhone in your pocket has over 100,000 times the processing power of the computer that landed man on the moon 50 years ago.”[14] … and we use it to look at cat videos and argue with strangers.
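
As a quick sanity check, here is a short Python calculation that uses only the two clock speeds quoted above. Clock rate is just one ingredient of processing power (word size, work done per cycle, memory, and core count all matter too), so this ratio measures something different from Kendall’s “processing power” comparison, and the two figures shouldn’t be expected to match.

```python
IPHONE_14_CLOCK_HZ = 3.0e9   # "somewhere above 3 GHz": 3 GHz used as a conservative floor
AGC_CLOCK_HZ = 0.043e6       # 0.043 MHz, the Apollo Guidance Computer figure quoted above

ratio = IPHONE_14_CLOCK_HZ / AGC_CLOCK_HZ
print(f"Clock-rate ratio: roughly {ratio:,.0f} to 1")   # roughly 69,767 to 1
```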

In any case, that ease of use is one of the reasons why the likes of slide rules and abacuses were relegated to museum displays while electronic calculators reigned king. So much for “ruling.” But, while digital has a lot to offer, like anything else, it has its limits. And mathematician and self-described “analog computer evangelist” Bernd Ulmann argues that we can’t push those limits much further. In his words:

“Digital computers are hitting basic physical boundaries by now. Computing elements cannot be shrunk much more than today, and there is no way to spend even more energy on energy-hungry CPU chips today.”[15]

It’s worth noting here that Ulmann said this in 2021, years ahead of the explosion of improvements in generative AI we’ve witnessed these past few months, like OpenAI’s text-prompt-to-video model, Sora.

But what did he mean by “physical boundaries”? Well…digital computing is starting to bump up against the law. No, not that kind…the scientific kind. There are actually a few at play here. We’ve already started talking about the relationship between digital computing and size, so let’s continue down that track.

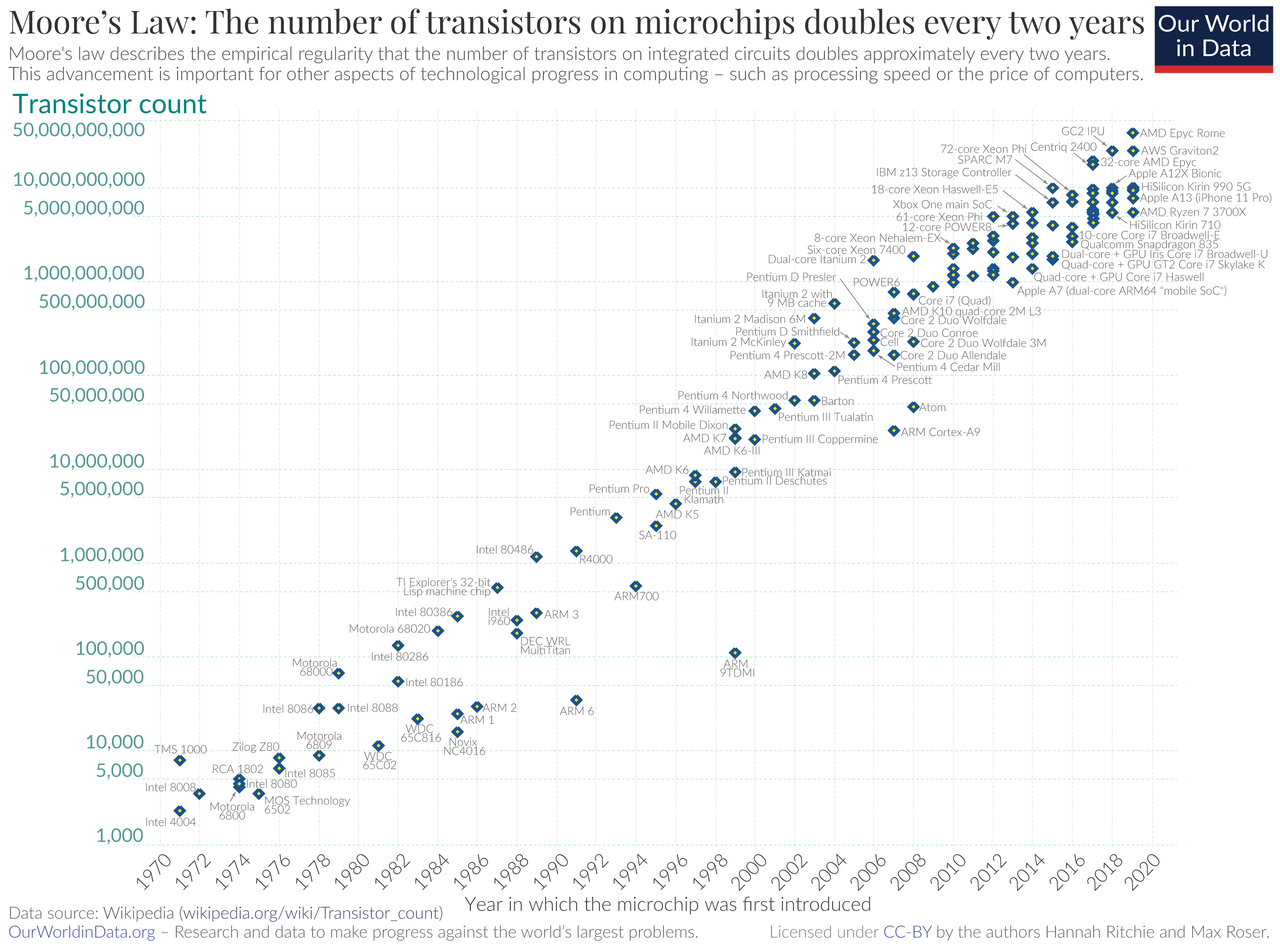

In a 1965 paper, Gordon Moore, co-founder of Intel, made a prediction that would come to be known as “Moore’s Law.” He foresaw that the number of transistors on an integrated circuit would double every year for the next 10 years, with a negligible rise in cost. And 10 years later, Moore changed his prediction to a doubling every two years.[16]
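
Moore’s prediction is plain exponential growth. Writing N₀ for a starting transistor count and T for the doubling period, the count after t years is N(t) = N₀ · 2^(t/T). A minimal Python sketch comparing the two doubling periods mentioned above over a ten-year span (the starting count of 1 is a normalization, not a real chip):

```python
def projected_transistors(initial_count: float, years: float, doubling_period_years: float) -> float:
    """Exponential growth with a fixed doubling period: N(t) = N0 * 2 ** (t / T)."""
    return initial_count * 2 ** (years / doubling_period_years)


# Normalize the starting chip to 1 so the output reads as a growth factor.
for period in (1, 2):   # Moore's original (yearly) and revised (every two years) doubling periods
    factor = projected_transistors(1, 10, period)
    print(f"Doubling every {period} year(s): {factor:,.0f}x after 10 years")
# Doubling every 1 year(s): 1,024x after 10 years
# Doubling every 2 year(s): 32x after 10 years
```

Stretching the doubling period from one year to two shrinks the ten-year gain from 1,024x to 32x, which shows how much rides on that single parameter.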

As Intel clarifies, Moore’s Law isn’t a scientific law, and Moore himself wasn’t too keen on his work being referred to as a “law.” However, the prediction has more or less held true as Intel (and other semiconductor companies) have hailed it as a goal to strive for: more and more transistors on smaller and smaller chips, for less and less money.[16]

Here’s the problem. What happens when we can’t make a computer chip any smaller? According to Intel, despite the warnings of experts in the past several decades, we’ve yet to hit that wall.[17] We can take it straight from Moore himself, though, that an end to the standard set by his law is inevitable. When asked about the longevity of his prediction during a 2005 interview, he said this:

“The fact that materials are made of atoms is the fundamental limitation and it’s not that far away. You can take an electron micrograph from some of these pictures of some of these devices, and you can see the individual atoms of the layers. The gate insulator in the most advanced transistors is only about three molecular layers thick…We’re pushing up against some fairly fundamental limits, so one of these days we’re going to have to stop making things smaller.”[18]

Not to mention, the more components you cram onto a chip, the hotter it becomes during use, and the more difficult it is to cool down.[19] It’s simply not possible to use all the transistors on a chip simultaneously without risking a meltdown.[20] This is also a critical problem in data centers, because electricity isn’t the only huge resource sink. Larger sites that use liquid as coolant rely on massive amounts of water a day: think upwards of millions of gallons.[21] In fact, Google’s data centers in The Dalles, Oregon, account for over a quarter of the city’s water use.[22]

On top of this, bacteria tend to really like the warm water circulating in cooling towers, including Legionella, which can cause a deadly form of pneumonia. Preventing outbreaks is another frighteningly big problem unto itself that requires careful management.[23] Meanwhile, emerging research on new approaches to analog computing has led to the development of materials that don’t need cooling facilities at all.[24]

Then there’s another law that stymies the design of digital computers: Amdahl’s law. And you might be able to get a sense of why it’s relevant just by looking at your wrist. Or your wall. Analog clocks, the kind with faces, are a handy way to picture the difference between analog and digital. When the hands move forward on a clock, they do so in one continuous movement, the same way analog computing occurs in real time, with mathematically continuous data. But when you look at a digital clock, you’ll notice that it updates its display in steps. That’s because, unlike with analog devices, digital information is discrete. It’s something that you count, rather than measure, hence the binary format of 0s and 1s.[25][26]

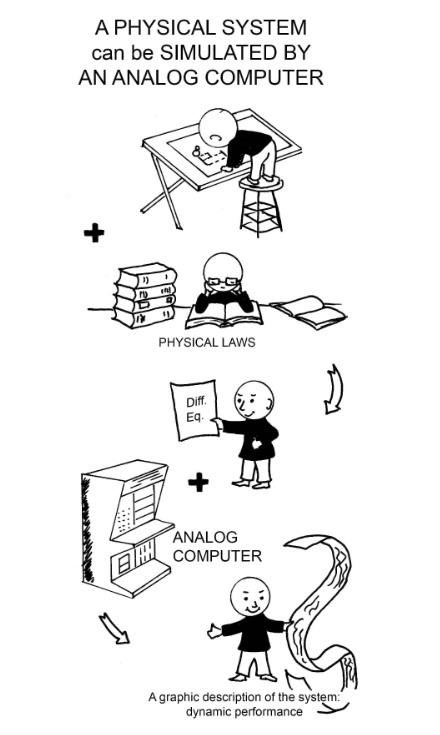

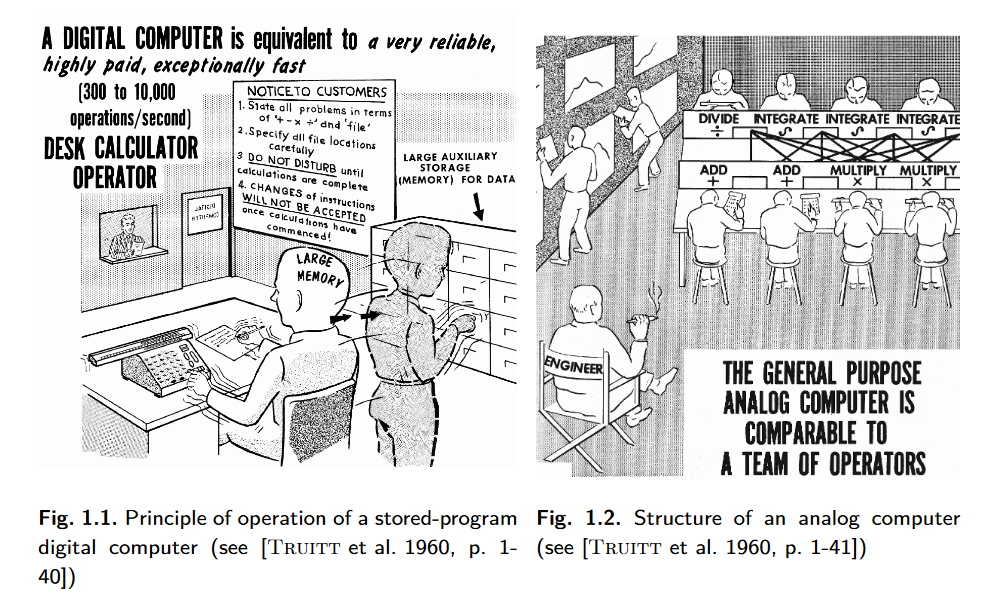

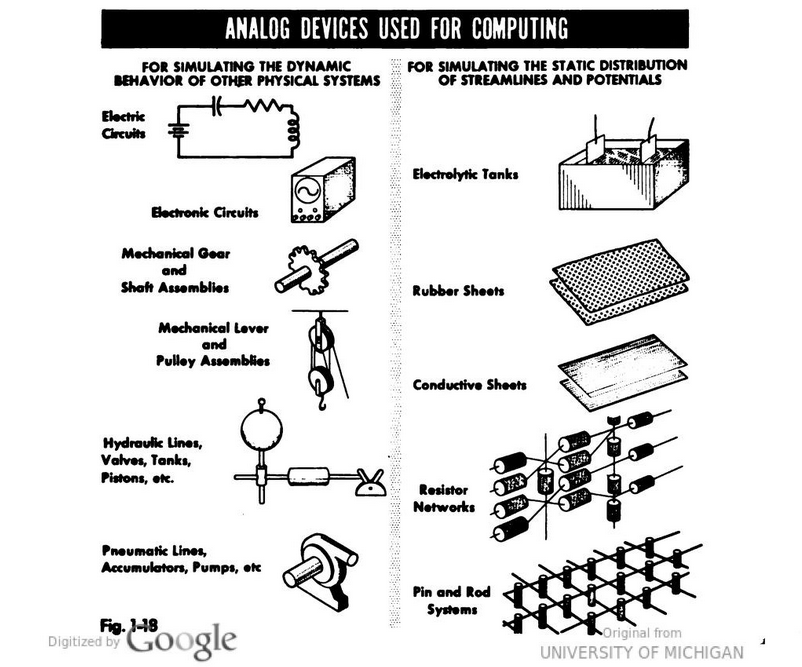

When a digital computer tackles a problem, it follows an algorithm: a finite sequence of steps that eventually leads to an answer.[27] Presenting a problem to an analog computer is a completely different procedure, and this cute diagram from the ’60s still holds true today:

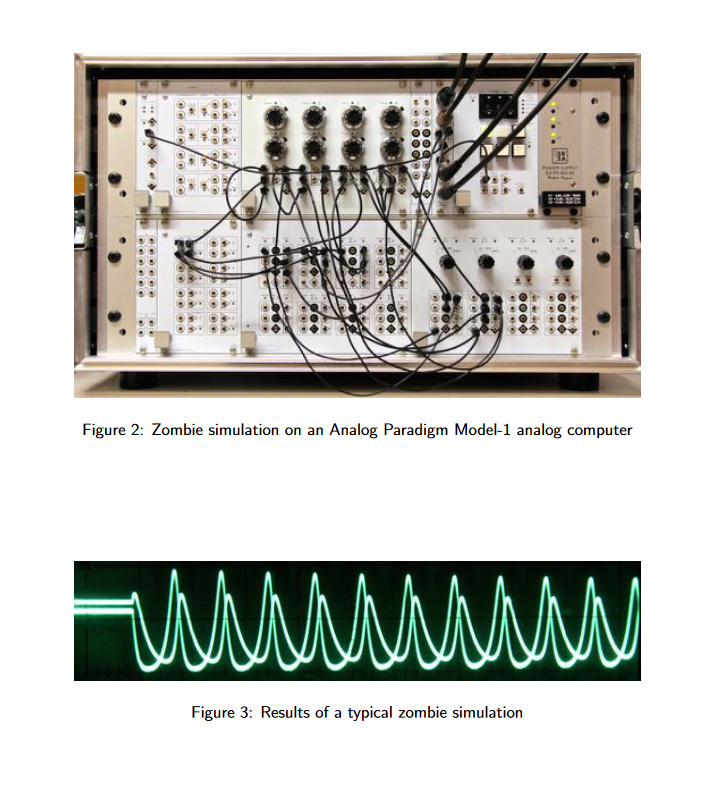

First, you take note of the physical laws that form the context of the problem you’re solving. Then, you create a differential equation that models the problem. If your blood just ran cold at the mention of math, don’t worry. All you need to know is that differential equations model dynamic problems, or problems that involve an element of change. Differential equations can be used to simulate anything from heat flow in a cable to the progression of zombie apocalypses. And analog computers are fantastic at solving them.[7][28]
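
For contrast, here is roughly how a digital computer attacks a differential equation: it chops time into small steps and updates the answer one step at a time. This minimal Python sketch uses the forward Euler method (one of the simplest numerical schemes, picked for readability rather than accuracy) on Newton’s law of cooling, dT/dt = -k (T - T_room). The coffee temperature, room temperature, and cooling constant are made-up illustrative values.

```python
import math


def euler_cooling(start_temp: float, room_temp: float, k: float,
                  duration: float, dt: float) -> float:
    """Step dT/dt = -k * (T - room_temp) forward in time with the forward Euler method."""
    temp = start_temp
    for _ in range(round(duration / dt)):
        temp += dt * (-k * (temp - room_temp))   # current slope times one small time step
    return temp


def exact_cooling(start_temp: float, room_temp: float, k: float, duration: float) -> float:
    """Closed-form solution T(t) = T_room + (T0 - T_room) * exp(-k t), used as a reference."""
    return room_temp + (start_temp - room_temp) * math.exp(-k * duration)


# Hypothetical values: 180 F coffee in a 70 F room, k = 0.1 per minute, after 10 minutes.
exact = exact_cooling(180.0, 70.0, 0.1, 10.0)
for dt in (1.0, 0.1, 0.01):
    approx = euler_cooling(180.0, 70.0, 0.1, 10.0, dt)
    steps = round(10.0 / dt)
    print(f"dt = {dt:<5} ({steps:>4} steps): {approx:.3f} F vs. exact {exact:.3f} F")
```

Forward Euler’s error shrinks roughly in proportion to dt, so more accuracy means more steps. An analog integrator, by contrast, has no time step to choose; it evolves continuously and is limited instead by the precision of its components.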

Once you’ve written a differential equation, you program the analog computer by translating each part of the equation into a physical part of the computer setup. And then you get your answer, which doesn’t even necessarily require a monitor to display![7][29]
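
Here is a rough idea of what “translating each part of the equation” means, using the textbook example y'' = -ω²y (a simple harmonic oscillator). On an electronic analog computer, that equation typically becomes a loop of two integrators, a coefficient potentiometer, and an inverter, and the integrators flip the sign of their output. The Python below is only a digital stand-in for that wiring: the block functions are hypothetical, and the tiny time step approximates what the real circuit does continuously.

```python
import math


# Hypothetical building blocks standing in for analog computing elements.
def integrator(output: float, input_sum: float, dt: float) -> float:
    """Sign-inverting integrator: its output changes at a rate of -(input_sum)."""
    return output - input_sum * dt


def coefficient(signal: float, k: float) -> float:
    """Coefficient potentiometer: scale a signal by k."""
    return k * signal


def inverter(signal: float) -> float:
    """Inverter: flip the sign of a signal."""
    return -signal


def run_oscillator(omega_sq: float, y0: float, duration: float, dt: float = 1e-4) -> float:
    """Simulate the patch for y'' = -omega_sq * y, starting at rest from y0; return y(duration)."""
    minus_dy = 0.0   # integrator 1 outputs -y'; initial condition y'(0) = 0
    y = y0           # integrator 2 outputs y; initial condition y(0) = y0
    for _ in range(round(duration / dt)):
        ddy = inverter(coefficient(y, omega_sq))   # y'' = -omega_sq * y, fed back to integrator 1
        minus_dy = integrator(minus_dy, ddy, dt)   # -(integral of y'') gives -y'
        y = integrator(y, minus_dy, dt)            # -(integral of -y') gives y
    return y


# With omega_sq = 1 and y0 = 1, the exact solution is y(t) = cos(t).
for t in (math.pi / 2, math.pi, 2 * math.pi):
    print(f"t = {t:.3f}: patched circuit {run_oscillator(1.0, 1.0, t):+.4f}, cos(t) {math.cos(t):+.4f}")
```

Each function maps to one physical box on the patch panel, and each variable maps to one wire; the feedback loop is what makes the circuit “solve” the equation.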

All of that might be tough to envision, so here’s another analog analogy that hopefully is less convoluted than the labyrinth of wires that make up a patch panel. Imagine a playground. Let’s say two kids want to race to the same spot, but each one takes a different path. One decides to skip along the hopscotch court, and the other rushes to the slide. Who will win?

These two areas of the playground are like different paradigms of computing. You count the hopscotch spaces outlined on the ground and move between them one by one, but you measure the length of a slide, and reach its end in one smooth move. And between these two methods of reaching the same goal, one is definitely a much quicker process than the other…and also takes a lot less energy.

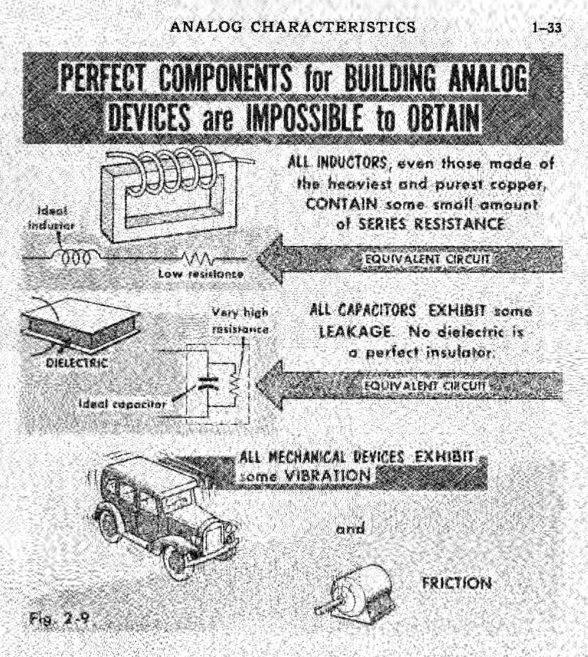

There are, of course, caveats to analog. If you asked the children in our playground example to repeat their race exactly the same way they did the first time, who do you think would be more accurate? Probably the one whose careful steps were marked with neat squares, and whose outcome would be the same, landing right within that final little perimeter of chalk. With discrete data, you can make perfect copies. It’s much harder to create copies given the messier nature of continuous data.[25] The question is: do we even need 100% accurate calculations? Some researchers are proposing that we don’t, at least not all the time.[20]
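
The “perfect copies” point can be sketched in a few lines of Python. The assumption here is a made-up copying process that adds a little random noise every time a value is copied. The analog value carries that noise forward, while the digital version snaps each copy back to the nearest 0 or 1, so small errors never pile up.

```python
import random
from typing import List


def copy_analog(value: float, generations: int, noise: float, rng: random.Random) -> float:
    """Copy a continuous value repeatedly; each copy adds noise that nothing removes."""
    for _ in range(generations):
        value += rng.uniform(-noise, noise)
    return value


def copy_digital(bits: List[int], generations: int, noise: float, rng: random.Random) -> List[int]:
    """Copy bits repeatedly; each copy is noisy, but reading it back as 0 or 1 cleans it up."""
    for _ in range(generations):
        noisy = [b + rng.uniform(-noise, noise) for b in bits]
        bits = [1 if x >= 0.5 else 0 for x in noisy]   # threshold back to a clean bit
    return bits


rng = random.Random(42)   # fixed seed so the run is repeatable
original_value = 0.7
original_bits = [1, 0, 1, 1, 0, 0, 1, 0]

print(copy_analog(original_value, 1000, 0.01, rng))                    # wanders away from 0.7
print(copy_digital(original_bits, 1000, 0.01, rng) == original_bits)   # True
```

The digital copy only stays perfect because the noise (±0.01 here) is far smaller than the gap between 0 and 1; with enough noise, bits would flip too.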

That said, what does this have to do with Amdahl’s law? Well, we can extend our existing scenario a little further. It takes time to remember the rules of hopscotch and then follow them accordingly. But you don’t need to remember any rules to use a slide — other than maybe “wait until there isn’t anybody else on it.” Comment below with your favorite memories of playground slide accidents!

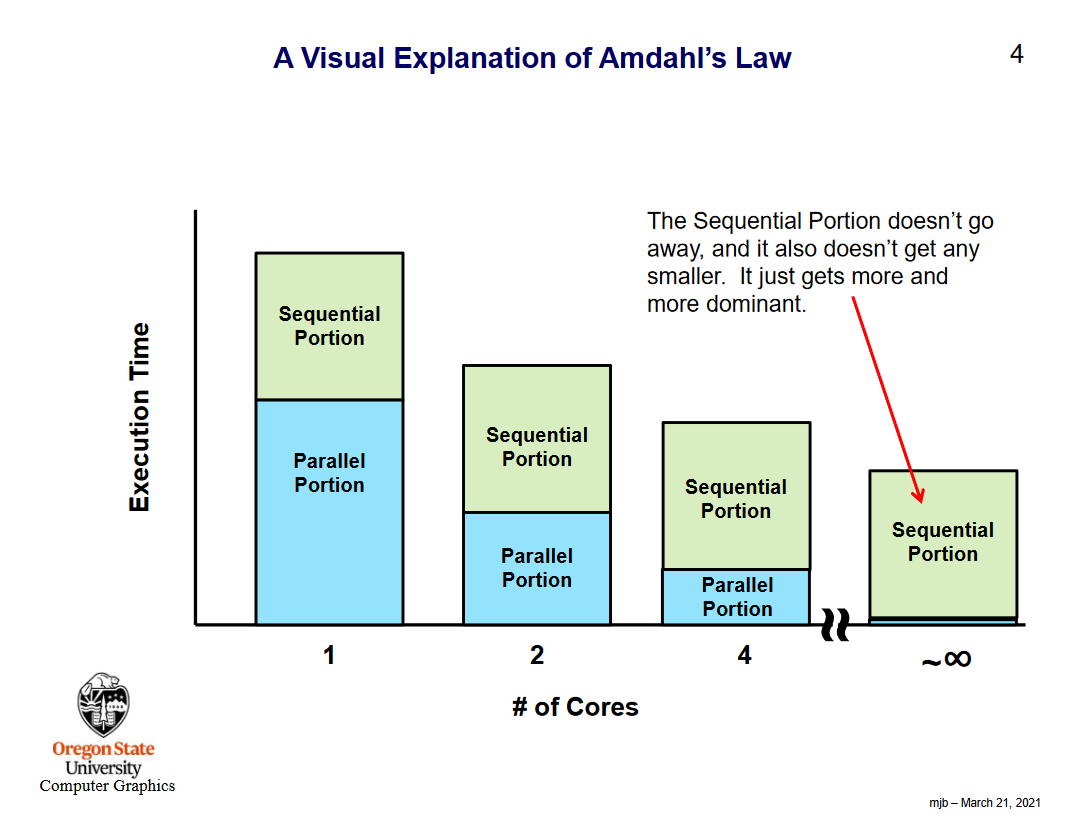

In any case, because digital computers (1) reference their memories and (2) solve problems algorithmically, there will always be operations (like remembering hopscotch rules) that must be performed sequentially. As computer science professor Mike Bailey puts it, “this includes reading data, setting up calculations, control logic, storing results, etc.” And because you can’t get rid of these sequential operations, you run into diminishing returns as you add more and more processors in an attempt to speed up your computing.[30] You can’t decrease the size of components forever, and you can’t increase the number of processors forever, either.
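
Amdahl’s law puts a number on those diminishing returns. If a fraction p of a program’s work can run in parallel and the rest must run sequentially, the best-case speedup on n processors is `S(n) = 1 / ((1 - p) + p / n)`, which can never exceed `1 / (1 - p)`. A minimal Python sketch, where the 95% parallel fraction is an arbitrary example:

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Best-case speedup when only parallel_fraction of the work can be split across processors."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / processors)


p = 0.95   # hypothetical: 95% of the work parallelizes, 5% stays sequential
for n in (1, 2, 8, 64, 1024, 1_000_000):
    print(f"{n:>9,} processors -> {amdahl_speedup(p, n):6.2f}x faster")
print(f"Ceiling as processors -> infinity: {1 / (1 - p):.0f}x")   # 20x
```

Even a million processors can’t push past 20x here, because the 5% of sequential work never gets any faster.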

On the other hand, analog computers don’t typically have memories that take time to access. That gives them more flexibility to work in parallel: a problem can be broken down into smaller, more manageable pieces, each handled by its own computing element, and all of those elements work at the same time without waiting on one another.[31][7]

Here’s how Bernd Ulmann explains it in his 2023 textbook, Analog and Hybrid Computer Programming, which was a major source of research for this video:

“Further, without any memory there is nothing like a critical section, no need to synchronize things, no communications overhead, nothing of the many trifles that haunt traditional parallel digital computers.”[7]

So, you might be thinking: speedier, more energy-efficient computing sounds great, but what does it have to do with me? Am I going to have to learn how to write differential equations? Will I need to knock down a wall in my office to make room for a retrofuturist analog rig?

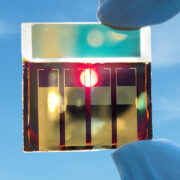

Probably not. Instead, hybrid computers that marry the best features of both digital and analog are what might someday be in vogue. There are already whispers of Silicon Valley companies secretly chipping away at…analog chips. Why? To conserve electricity…and cost. The idea is to combine the energy efficiency of analog with the precision of digital. This is especially important for the continued development of the power-hungry machine learning that makes generative AI possible. With any luck, that means products that are far less costly to maintain, both environmentally and financially.[28]

And that’s exactly what Mythic, headquartered in the U.S., is aiming for. Mythic claims that its Analog Matrix Processor chip can “deliver the compute resources of a GPU at up to 1/10th the power consumption.”[32] Basically, as opposed to storing data in static RAM, which needs an uninterrupted supply of power, the analog chip stores data in flash memory, which doesn’t need power to keep information intact. Rather than 0s and 1s, the data is retained in the form of voltages.[28]
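
Analog AI chips are commonly described as leaning on a trick from basic circuit theory, sketched here in Python as a hedged illustration rather than a description of Mythic’s actual design: store each weight as a conductance G, apply each input as a voltage V, and Ohm’s law (I = G × V) does the multiplication while Kirchhoff’s current law sums the currents meeting on a shared wire. The result is a matrix-vector product computed right where the weights are stored. The function name, the noise model, and all the numbers are assumptions for illustration.

```python
import random
from typing import List, Optional


def crossbar_mvm(conductances: List[List[float]], voltages: List[float],
                 noise_std: float = 0.0, rng: Optional[random.Random] = None) -> List[float]:
    """Idealized analog crossbar: the current on output row i is sum_j G[i][j] * V[j].

    Ohm's law (I = G * V) performs each multiplication, and Kirchhoff's current law
    adds up the currents that meet on the same output wire. noise_std is a stand-in
    for analog imprecision (hypothetical, not a measured figure).
    """
    rng = rng if rng is not None else random.Random()
    currents = []
    for row in conductances:
        current = sum(g * v for g, v in zip(row, voltages))   # in hardware: currents merging on one wire
        if noise_std > 0:
            current += rng.gauss(0.0, noise_std)
        currents.append(current)
    return currents


# Made-up weights (as conductances) and inputs (as voltages).
weights = [[0.2, 0.5, 0.1],
           [0.7, 0.0, 0.3]]
inputs = [1.0, 0.5, 2.0]

print(crossbar_mvm(weights, inputs))                                        # ideally 0.65 and 1.3
print(crossbar_mvm(weights, inputs, noise_std=0.01, rng=random.Random(7)))  # close, but not exact
```

The noisy run lands near the ideal answer without hitting it exactly, which is the precision trade-off that pairing analog with digital is meant to manage.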

Where could we someday see analog computing around the house, though? U.S.-based company Aspinity has an answer to that. Its AML100, which the company calls the “world’s first fully analog machine learning chip,” can act as a low-power sensor for a bunch of applications, according to its website. It can detect a wake word in voice-enabled wearables like wireless earbuds or smartwatches, listen for the sound of breaking glass or smoke alarms, and monitor heart rates, just to name a few.

For those devices that always need to be on, this means energy savings that are nothing to sneeze at (although I guess you could program an AML100 to alert you whenever somebody sneezes). Aspinity claims that its chip can enable a reduction in power use of 95% or more.[33]
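
As a back-of-the-envelope check on what a 95% cut means, the Python sketch below assumes, hypothetically, that always-on listening is the only load on a small battery. The battery capacity and baseline draw are invented numbers; real devices have other loads, so actual battery-life gains would be smaller.

```python
def runtime_hours(battery_mwh: float, draw_mw: float) -> float:
    """Hours of operation for a battery of battery_mwh feeding a constant draw_mw load."""
    return battery_mwh / draw_mw


BATTERY_MWH = 200.0   # hypothetical small wearable battery
BASELINE_MW = 1.0     # hypothetical always-on listening power without an analog front end
REDUCTION = 0.95      # Aspinity's claimed "95% or more," taken at its lower bound

before = runtime_hours(BATTERY_MWH, BASELINE_MW)
after = runtime_hours(BATTERY_MWH, BASELINE_MW * (1 - REDUCTION))
print(f"{before:.0f} h -> {after:.0f} h ({after / before:.0f}x longer)")   # 200 h -> 4000 h (20x longer)
```

Whatever the baseline, cutting a load by 95% leaves 1/20 of it, so the always-on part alone would last 20 times longer.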

So, the potential of maximizing efficiency through analog computing is clear, and the world we interact with every day is itself analog. Why shouldn’t our devices be, too? But to say that analog programming appears intimidating (and dated) is…somewhat of an understatement.

It’ll definitely need a bit of an image upgrade to make it approachable and accessible to the public, though there are already models out there that you can fiddle with yourself at home, if you’re brave enough. German company Anabrid, which was founded by Ulmann in 2020, currently offers two: the Analog Paradigm Model-1, and The Analog Thing (or THAT).[34][35]

The Model-1 is intended for more experienced users who are willing to assemble the machine themselves. Each one is produced on demand based on the parts ordered, so you can tailor the modules to your needs.[36]

THAT, on the other hand…and by THAT I mean THAT: The Analog Thing, is sold fully assembled.[37] You could also build your own from scratch, since the components and schematics are open source.[38]

So what do you actually do with the thing? Y’know…THAT? I’ll let the official wiki’s FAQ answer that:

“You can use it to predict in the natural sciences, to control in engineering, to explain in educational settings, to imitate in gaming, or you can use it for the pure joy of it.”[39]

THAT, like any analog computer, solves problems you can express as differential equations. As a reminder, that’s basically any scenario involving change, from simulating air flow to solving heat equations.[39][40] You can also make music!

But even as analog computing becomes more readily available, there’s still a lot of work to be done. For one thing, it’ll take effort to engineer seamless connectivity between analog and digital systems, as Ulmann himself points out.[7]

1. ICT Sector Electricity Consumption and Greenhouse Gas Emissions – 2020 Outcome
2. 15 Crucial Data Center Statistics to Know in 2024
3. 2023 Global Data Center Market Comparison
4. How much electricity does AI consume?
5. Generative AI’s Energy Problem Today Is Foundational
6. Opportunities in physical computing driven by analog realization
7. Analog and Hybrid Computer Programming
8. Phillips Machine
9. MONIAC and Bill Phillips
10. Analog computation, Part 1: What and why
11. Tide-predicting machine
12. How To Use a E6B Flight Computer
13. iPhone Q&A: What processor or processors do the iPhone models use?
14. The First Moon Landing Was Achieved With Less Computing Power Than a Cell Phone or a Calculator
15. anabrid mission statement
16. Moore’s Law
17. Understanding Moore’s Law
18. Moore’s Law 40th Anniversary with Gordon Moore
19. What Is Moore’s Law and Is It Still True?
20. EnerJ, the Language of Good-Enough Computing
21. A new front in the water wars: Your internet use
22. Google’s water use is soaring in The Dalles, records show, with two more data centers to come
23. Protecting Data Centres from Legionella
24. Analog Computers May Work Better Using Spin Than Light
25. Analog and Digital Computers
26. What is the difference between continuous and discrete random variables?
27. Algorithm
28. The Unbelievable Zombie Comeback of Analog Computing
29. The Analog Thing: First Steps
30. Parallel Programming: Speedups and Amdahl’s law
31. What is Parallel Computing?
32. Overview
33. Products
34. Anabrid GmbH
35. Buy your own “THE ANALOG THING”
36. Analog Paradigm Model-1
37. The Analog Thing
38. The Analog Thing Specs
39. The Analog Thing FAQ
40. Application Notes
